When AI Steps Off the Cloud: Embedded Intelligence for the Next Generation of Products

Artificial intelligence has spent most of its life in the cloud and in high-power processors. For years, whether for voice recognition, image classification, or predictive analytics, running a model meant shipping data off-device and waiting for a server to respond. Later, Edge AI emerged as a new frontier: high-performance inference at or near the data source. Gateways, cameras, and industrial controllers began running models locally using powerful SoCs or GPUs. Edge AI brought latency down and privacy up, but it still demanded significant power, cost, and cooling.

Now the next frontier is emerging: Embedded AI. It takes the same intelligence that once required a data centre or a multicore ARM processor and runs it on low-cost, ultra-low-power microcontrollers. This is not just a scaled-down version of Edge AI; it’s a fundamentally different approach that’s reshaping what kinds of products can include machine learning.

Edge AI vs. Embedded AI: A Clear Distinction

The term Edge AI often gets used loosely, but the technical distinction matters:

Edge AI is about bringing intelligence closer to the data source. Embedded AI is about putting intelligence inside the device itself.

The Enablers: Hardware and Model Compression

Recent microcontrollers now include dedicated AI hardware, including DSPs, NPUs, or SIMD extensions, that handle convolution, matrix multiplication, and activation functions efficiently. For example:

- STMicroelectronics STM32N6, which pairs a Cortex-M55 core with vector extensions and an integrated NPU.

- NXP i.MX RT700, which integrates a DSP and an NPU with dual Cortex-M33 cores.

- Renesas RA8P1, which combines a Cortex-M85 core with vector extensions, a Cortex-M33 core, and an NPU.

Using its NPU, the STM32N6 outperforms a Raspberry Pi 4 and STM32H7 microcontroller by factors of ~7x and more than 100x respectively, running the same TinyYolo V2 object detection model.

On the model side, techniques like quantization (8-bit or 4-bit), pruning, and knowledge distillation reduce memory footprint by orders of magnitude while preserving usable accuracy.
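To make the quantization step concrete, here is a minimal sketch of per-tensor affine quantization from 32-bit floats to 8-bit integers. It illustrates the general idea only and is not the exact scheme used by any particular toolchain; the function names and the sample weights are hypothetical. Note that 8-bit quantization on its own shrinks float32 weights by about 4x; larger reductions come from combining it with pruning and distillation.

```python
import struct


def quantize_int8(weights):
    """Affine-quantize a list of floats to int8; return (values, scale, zero_point)."""
    # Widen the range to include 0.0 so that zero is represented exactly.
    w_min = min(min(weights), 0.0)
    w_max = max(max(weights), 0.0)
    scale = (w_max - w_min) / 255.0 or 1.0  # fall back to 1.0 for all-zero input
    zero_point = round(-128 - w_min / scale)
    # Map each float to an integer and clamp to the int8 range.
    q = [max(-128, min(127, round(w / scale) + zero_point)) for w in weights]
    return q, scale, zero_point


def dequantize(q, scale, zero_point):
    """Recover approximate float values from the int8 representation."""
    return [(v - zero_point) * scale for v in q]


# Hypothetical weights standing in for one layer of a trained model.
weights = [-0.82, -0.31, 0.0, 0.07, 0.45, 1.20]
q, scale, zp = quantize_int8(weights)
restored = dequantize(q, scale, zp)

float_bytes = len(struct.pack(f"{len(weights)}f", *weights))  # 4 bytes per weight
int8_bytes = len(struct.pack(f"{len(q)}b", *q))                # 1 byte per weight
max_error = max(abs(a - b) for a, b in zip(weights, restored))

print(f"float32: {float_bytes} B, int8: {int8_bytes} B, max error: {max_error:.4f}")
```

The rounding error per weight stays within one quantization step (`scale`), which is the sense in which accuracy remains "usable" rather than identical.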

The result is real-time inference (latency of tens of milliseconds) at typically well under a watt of power, with the low-power sleep and fast wake-up that come from using a microcontroller. This enables near-instant response and local analysis on devices that can run for months on a single battery.
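Battery life in this kind of design depends mostly on duty cycling: the device wakes briefly to run inference and sleeps the rest of the time. The sketch below works through that arithmetic. Every number in it (currents, timings, battery capacity) is a hypothetical placeholder rather than a measured figure for any specific part, and it ignores battery self-discharge and regulator losses.

```python
def average_current_ma(active_ma, sleep_ma, active_ms, period_ms):
    """Time-weighted average current for a wake/infer/sleep cycle."""
    duty = active_ms / period_ms
    return active_ma * duty + sleep_ma * (1.0 - duty)


def battery_life_days(capacity_mah, avg_ma):
    """Idealized battery life in days for a given average current."""
    return capacity_mah / avg_ma / 24.0


# Hypothetical device: one 30 ms inference per second, deep sleep otherwise.
avg = average_current_ma(active_ma=60.0, sleep_ma=0.02, active_ms=30.0, period_ms=1000.0)
days = battery_life_days(capacity_mah=2500.0, avg_ma=avg)

# The same hypothetical device if it never slept.
always_on_days = battery_life_days(capacity_mah=2500.0, avg_ma=60.0)

print(f"average current: {avg:.4f} mA")
print(f"duty-cycled: {days:.1f} days, always on: {always_on_days:.1f} days")
```

With these assumed values the duty-cycled device lasts about 57 days against under two days when always on, which is why sleep current and wake-up time matter as much as inference speed.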

Implications for Product Design

From a product design and software architecture perspective, embedded AI unlocks new capabilities:

- Real-Time Local Decision-Making

No network latency, no connectivity requirement. Products can make immediate decisions, whether detecting a wake word, identifying a gesture, or recognizing an anomaly. This fundamentally changes the system architecture: less dependence on cloud infrastructure, and more autonomy at the node.

- Privacy and Data Sovereignty

Processing stays local, reducing data exposure risk and simplifying compliance with privacy frameworks (GDPR, HIPAA, etc.). For medical devices and consumer products alike, this “privacy by architecture” approach builds both trust and resilience.

- Energy and Cost Efficiency

Embedded AI eliminates the cloud processing overhead, data transfer costs, and power draw of high-performance hardware. It makes intelligent features viable in cost-sensitive markets, from smart tools and toys to large-scale sensor deployments.

- Resilient and Scalable Architectures

Designers can now distribute intelligence across many low-power nodes instead of centralizing it. This creates systems that are more fault-tolerant, scalable, and energy-efficient, and is ideal for environments where connectivity is intermittent or constrained.

Designing for Embedded AI

For teams used to cloud or edge systems, designing for embedded AI requires new thinking:

- Model Selection and Training: Start with compact architectures suited to MCU deployment (e.g., MobileNetV3 or other small networks from the TinyML ecosystem).

- Optimization: Quantize the trained model, then compile and deploy it using TensorFlow Lite Micro, CMSIS-NN kernels, or vendor-specific SDKs.

- Memory Mapping: Carefully partition flash, SRAM, and external memory for model weights and intermediate buffers.

- Energy Profiling: Integrate inference power consumption measurements early to manage duty cycles and standby modes.

- UX and Behaviour Design: Design around probabilistic outputs; models may be good, not perfect.
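One common way to design around probabilistic outputs is to require several consecutive confident frames before acting, and to release only when confidence clearly drops (hysteresis). The sketch below shows that pattern; the class name, thresholds, and score sequence are hypothetical and would need tuning against real model output.

```python
class DebouncedDetector:
    """Turn noisy per-frame probabilities into a stable on/off decision."""

    def __init__(self, on_threshold=0.8, off_threshold=0.5, frames_required=3):
        self.on_threshold = on_threshold
        self.off_threshold = off_threshold
        self.frames_required = frames_required
        self.active = False
        self._streak = 0

    def update(self, probability):
        if not self.active:
            # Count consecutive confident frames; any weak frame resets the count.
            if probability >= self.on_threshold:
                self._streak += 1
            else:
                self._streak = 0
            if self._streak >= self.frames_required:
                self.active = True
                self._streak = 0
        elif probability < self.off_threshold:
            # Hysteresis: switch off only once confidence falls below the lower bound.
            self.active = False
        return self.active


# Hypothetical per-frame scores from a wake-word or gesture model.
scores = [0.2, 0.9, 0.3, 0.85, 0.9, 0.95, 0.7, 0.6, 0.4, 0.1]
detector = DebouncedDetector()
states = [detector.update(p) for p in scores]
print(states)
```

In this run the single 0.9 spike in the second frame is ignored, and the detection holds through the 0.7 and 0.6 frames instead of flickering, which is usually the behaviour users expect.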

This discipline merges embedded firmware engineering with machine learning: a hybrid skillset that’s increasingly valuable for modern product development.
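As an illustration of the memory-mapping step listed above, the following sketch checks whether a model fits a given flash and SRAM budget. It assumes a strictly sequential network in which weights live in flash and only one layer's input and output buffers are live in SRAM at a time. Real runtimes plan buffers more carefully and add their own overhead, and the layer sizes and budgets here are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Layer:
    name: str
    weight_bytes: int   # stored in flash
    input_bytes: int    # activation buffer read by the layer
    output_bytes: int   # activation buffer written by the layer


def memory_plan(layers, flash_budget, sram_budget):
    """Estimate flash and SRAM needs for a sequential model and compare to budgets."""
    flash_needed = sum(layer.weight_bytes for layer in layers)
    # Peak SRAM: the largest input + output pair that must be live at once.
    arena_needed = max(layer.input_bytes + layer.output_bytes for layer in layers)
    return {
        "flash_needed": flash_needed,
        "arena_needed": arena_needed,
        "fits_flash": flash_needed <= flash_budget,
        "fits_sram": arena_needed <= sram_budget,
    }


# Hypothetical int8 model: 96x96 grayscale input, two conv layers, one dense layer.
layers = [
    Layer("conv1", weight_bytes=72, input_bytes=9216, output_bytes=18432),
    Layer("conv2", weight_bytes=1152, input_bytes=18432, output_bytes=9216),
    Layer("dense", weight_bytes=36864, input_bytes=9216, output_bytes=4),
]

plan = memory_plan(layers, flash_budget=512 * 1024, sram_budget=32 * 1024)
tight = memory_plan(layers, flash_budget=512 * 1024, sram_budget=16 * 1024)
print(plan)
print(tight)
```

Here the weights are small, but the intermediate buffers dominate: the same model fits a 32 KiB SRAM budget and fails a 16 KiB one, which is the kind of constraint that often drives input resolution and layer-width choices on a microcontroller.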

The Future: Embedded Intelligence Everywhere

Embedded AI closes the gap between perception and action. Instead of devices that report data, we can now design devices that understand it locally, privately, and efficiently.

As the technology matures, we’ll see intelligence migrate from servers to sensors, from the edge to the endpoint. That shift doesn’t just make products smarter: it makes them more responsive, sustainable, and human.

If you’re exploring how AI could make your product smarter, without the cost, power, and privacy challenges of traditional edge or cloud approaches, we’d love to collaborate.

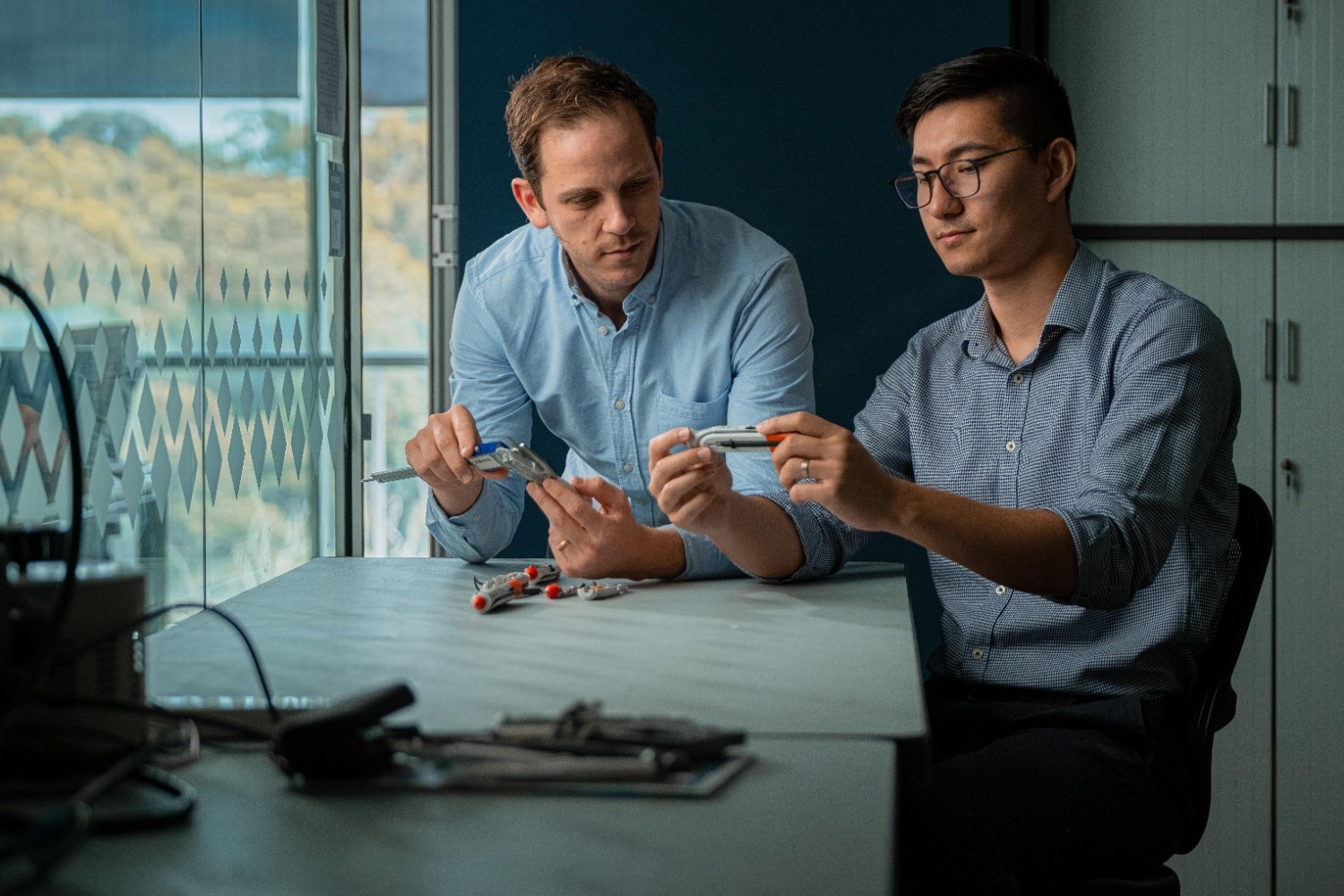

At Ingenuity Design Group, we help companies move from concept to prototype to production, integrating embedded AI where it truly adds value.

Let’s design the next generation of intelligent devices - ones that think locally and act instantly.